Anyone can download, but practically no one can run it.

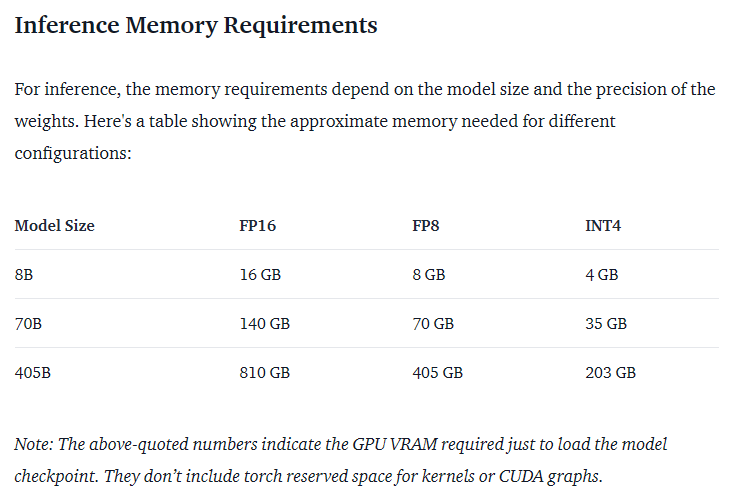

With the absolute highest memory compression settings, the largest model you can fit inside 24GB of VRAM has 109 billion parameters.

That means even with crazy compression, you need at the very least ~100GB of VRAM to run it. That’s only in the realm of the larger workstation cards, which cost around $24,000 to $40,000 each, so y’know.
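A quick back-of-the-envelope sketch of that arithmetic, counting weights only. It assumes decimal gigabytes (the post doesn't say GB vs GiB) and ignores KV cache, activations and runtime overhead, so real requirements are higher. The helper names are hypothetical and not from any library.

```python
import math

GB = 1e9  # assumption: decimal gigabytes, not GiB


def bits_per_param(vram_gb: float, params_billions: float) -> float:
    """Average bits per weight needed to squeeze params_billions into vram_gb."""
    return vram_gb * GB * 8 / (params_billions * 1e9)


def weights_gb(params_billions: float, bits: float) -> float:
    """Weights-only footprint in GB (no KV cache, activations or overhead)."""
    return params_billions * 1e9 * bits / 8 / GB


def cards_needed(total_gb: float, per_card_gb: float = 24.0) -> int:
    """How many equal-size cards it takes for their combined VRAM to cover total_gb."""
    return math.ceil(total_gb / per_card_gb)


bpp = bits_per_param(24, 109)
print(f"{bpp:.2f} bits per parameter")  # 1.76 bits per parameter
print(cards_needed(100))                # 5
```

At roughly 1.76 bits per weight, a 24GB card tops out near 109B parameters. Multiplying any model's parameter count by that figure with `weights_gb` gives a rough floor for its weights, and `cards_needed` shows why ~100GB works out to five 24GB cards.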

Ouch.

You can get usable performance on a CPU with good memory bandwidth. Mac Studios are the best way to get that right now, but a good EPYC with 256GB of RAM works too.
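Single-user generation on a CPU is typically bound by memory bandwidth rather than compute: producing each token means streaming roughly all of the weights through memory once. A crude upper bound on speed is therefore bandwidth divided by the bytes read per token. This sketch assumes exactly that simple model; the inputs are illustrative (800 GB/s is roughly what Apple quotes for the M2 Ultra, and 100 GB matches the figure above), and real throughput will come in lower.

```python
def max_tokens_per_second(bandwidth_gb_s: float, weights_read_gb: float) -> float:
    """Upper bound on decode speed if each token reads all weights from memory once.

    Ignores KV-cache traffic, compute limits and caching, so real numbers are lower.
    """
    if weights_read_gb <= 0:
        raise ValueError("weights_read_gb must be positive")
    return bandwidth_gb_s / weights_read_gb


# Illustrative inputs only: ~800 GB/s of memory bandwidth, ~100 GB of weights.
tps = max_tokens_per_second(800, 100)
print(f"<= {tps:.1f} tokens/s, >= {1 / tps:.3f} s per token")
# <= 8.0 tokens/s, >= 0.125 s per token
```

This is why the comment stresses memory bandwidth rather than core count.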

Of course, you could also just run 5 GPUs.

“Usable,” sure, if you want to wait 10 minutes per word.

lol, it’s just a program that tries to do everything with any data you throw at it (whatever happened to the UNIX philosophy…), but using insane amounts of computing power. General-purpose models will always, ALWAYS eventually run into the wall of the second law of thermodynamics.

LLM extensions… yeah, we already have a tool for that. It’s called an OS and programs.

Until we overcome the limitations of current computers, replacing code with training data and hoping it will be as good and efficient is a naive pipe dream.

I’m envisioning downloading this, then my PC starts to smell bad and spits at me a lot.