Need to let loose a primal scream without collecting footnotes first? Have a sneer percolating in your system but not enough time/energy to make a whole post about it? Go forth and be mid: Welcome to the Stubsack, your first port of call for learning fresh Awful you’ll near-instantly regret.

Any awful.systems sub may be subsneered in this subthread, techtakes or no.

If your sneer seems higher quality than you thought, feel free to cut’n’paste it into its own post — there’s no quota for posting and the bar really isn’t that high.

The post Xitter web has spawned soo many “esoteric” right wing freaks, but there’s no appropriate sneer-space for them. I’m talking redscare-ish, reality challenged “culture critics” who write about everything but understand nothing. I’m talking about reply-guys who make the same 6 tweets about the same 3 subjects. They’re inescapable at this point, yet I don’t see them mocked (as much as they should be)

Like, there was one dude a while back who insisted that women couldn’t be surgeons because they didn’t believe in the moon or in stars? I think each and every one of these guys is uniquely fucked up and if I can’t escape them, I would love to sneer at them.

(Semi-obligatory thanks to @dgerard for starting this.)

yet another whistleblower is dead; this time, it’s the OpenAI copyright whistleblower Suchir Balaji


OpenAI whistleblower found dead in San Francisco apartment.

Thread on r/technology.

edited to add:

From his personal website: When does generative AI qualify for fair use?

Let them fight. https://openai.com/index/elon-musk-wanted-an-openai-for-profit/

Saw something about “sentiment analysis” in text. While writers have discussed “death of the author” and philosophers and linguists have discussed what it even means to derive meaning from text, these fucking AI dorks are looking at text in a vacuum and concluding “this text expresses anger”.

`print("I'm angry!")`

the above python script is angry, look at my baby skynet

Openai are you angry? Yes -> it is angry. No -> it is being sneaky, and angry.

Us ADHD people really have to get our rejection sensitivity under control, I tell ya what.

I am deeply hurt by this post. I thought we were friends here. (/s)

Oh no, are you mad at me? (j/k!!)

Please dont mock me like that.

sentiment analysis is such a good example of a pre-LLM AI grift. every time I’ve seen it used for anything, it’s been unreliable to the point of being detrimental to the project’s goals. marketers treat it like a magic salve and smear it all over everything of course, and that’s a large part of why targeted advertising is notoriously ineffective

It’s built upon such a nonsensical ontology. The sentiment expressed in a piece of language is at least partially a social function, which is why I can add the following

I AM BEYOND FUCKING LIVID AT EVERYONE IN THIS FUCKING INSTANCE

to this response and no one will actually assume I’m really angry (I am though, send memes).
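To make the point concrete, here is a minimal sketch of the text-in-a-vacuum approach. It is a toy keyword scorer, not how any particular product works; the word list `ANGRY_WORDS` and the function `naive_sentiment` are made up for illustration.

```python
import re

# Hypothetical toy lexicon: words a naive scorer treats as signs of anger.
ANGRY_WORDS = {"angry", "livid", "furious", "fucking", "hate"}

def naive_sentiment(text: str) -> str:
    """Label text 'angry' if it contains any lexicon word, else 'neutral'."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    return "angry" if words & ANGRY_WORDS else "neutral"

joke = "I AM BEYOND FUCKING LIVID AT EVERYONE IN THIS FUCKING INSTANCE"
print(naive_sentiment(joke))                    # the obvious bit gets flagged
print(naive_sentiment('print("I\'m angry!")'))  # so does the python script
```

Both inputs come back labelled angry, because nothing in the scorer knows who is speaking, to whom, or why.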

Edit: not one meme. Not. One.

Edit2: thank you for the memes, @[email protected]. This one is my favorite. It feels Dark Souls-y.

Image description

Live crawfish with arms spread in front of bowl of cooked crawfish with caption “Stand amongst the ashes of a trillion dead souls and ask the ghosts if honor matters”.

it’s a quote from mass effect. also, shrimp welfare

I read this as shrimp warfare and while I’m not sure about WW3, the fifth or sixth world war will be fought between the shrimp and the crows over rulership of the earth.

The basement in Canaan House if God were Cajun.

found a new movie plot threat https://www.science.org/doi/10.1126/science.ads9158

funded by open philanthropy, but not only and also got some other biologists onboard. 10 out of 39 authors had open philanthropy funding in the last 5 years so they’re likely EAs. highly speculative as of now and not anywhere close to being made, as in we’ll be dead from global warming before this gets anywhere close from my understanding. also starting materials would be hideously expensive because all of this has to be synthetic and enantiopure, and every technique has to be remade from scratch in unnatural enantiomer form. it even has LW thread by now hxxps://www.lesswrong.com/posts/87pTGnHAvqk3FC7Zk/the-dangers-of-mirrored-life

it hit news https://www.nytimes.com/2024/12/12/science/a-second-tree-of-life-could-wreak-havoc-scientists-warn.html https://www.theguardian.com/science/2024/dec/12/unprecedented-risk-to-life-on-earth-scientists-call-for-halt-on-mirror-life-microbe-research

I read the headline yesterday and thought, “This is 100% fundraising bullshit.”

This strikes me as being exact same class of thing OpenAI does when they pronounce that their product will murder us all.

What do we call this? Marketerrorism?

i see how it’s critihype but i don’t understand where’s money in this one

CRITIHYPE, thank you! I couldn’t find the word!

If I had to guess a motive, it would be to bring mirror biology out of the obscurity of pure research (who funds that anymore?) and to instead plant it firmly into the popular zeitgeist as a “scary thing” that needs to be defended against. This can lead to it becoming a trendy topic, and therefore fundable by grant-awarding agencies.

as in, funding for writing ratty screeds? because they specifically want to cut funding to d-proteins and such. this also works for fundraising

Maybe I’m being too cynical. It wouldn’t be the first time this week that someone drew a spooky picture, would it?

nooo waay

Mirror bacteria? Boring! I want an evil twin from the negaverse who looks exactly like me except right hande-- oh heck. What if I’m the mirror twin?

I’m definitely out of my depth here, but how exactly does a lefty organism bypass immune responses and still interact with the body? Seems like if it has a way to mess up healthy cells then it should have something that antibodies can connect to, mirrored or not. Not that I’m arguing we shouldn’t be careful about creating novel pathogens, but other than being a more flashy sci-fi premise I’m not really seeing how it’s more dangerous than the right-handed version.

Also I think this opens up a beautiful world of new scientific naming conventions:

- Southpaw Paramecium

- Lefty Naegleria

- Sinister Influenza

the way i understand it, because immune system is basically constantly fuzzing all potentially new things, what is important is how antigen looks like on the surface. what it is made from matters less, and whether aminoacids there are l- (natural) or d- (not) it shouldn’t matter that much, antibodies are generated for nonnatural achiral things all the time including things like PEG and chloronitrobenzene. then complement system puts holes in bacterial membrane and that’s it, it’s not survivable for bacterium and does not depend on anything chiral. normally all components are promptly shredded, it’s a good question if that would happen too but, like - this might not matter too hard - there’s a way for immune system to smite this thing

the potential problem is that peptides made from d-aminoacids are harder to cut via hydrolases and it’s a part of some more involved immune response idk details. there’s plenty of stuff that’s achiral like glycerol, glycine, beta-alanine, TCA components, fatty acids that mirrored bacteria can feed on without problems. some normal bacteria also use d-aminoacids so normal l-aminoacids should be usable for d-protein bacteria. there’s also transaminase that takes d-aminoacids and along with other enzymes it can turn these into l-aminoacids. but even more importantly we’re perhaps 30 years away from making this anywhere close to feasible, it’s all highly speculative. there’s a report if you want to read it https://stacks.stanford.edu/file/druid:cv716pj4036/Technical Report on Mirror Bacteria Feasibility and Risks.pdf

also look up cost of these things. unnatural aminoacids, especially these with wrong conformation but otherwise normal are expensive. l-tert-leucine is unnatural but can be made in biotechnological process, so it’s cheaper. for example on sigma-aldrich, d-glutamine costs 100x more than l-glutamine, and for sugars it’s even worse because these have more chiral centers

besides, it’s not really worth it probably? it will take decades and cost more than ftx wiped out. other than making it work just to make it work, all the worthwhile components can be made synthetically, maybe there’s some utility in d-proteins, more likely d-peptides, tiny amounts of these can be made by SPPS (for screening) and larger in normal chemical synthesis (for use). these might be slightly useful if slowed down degradation of peptides could be exploited in some kind of pharmaceutical, but do you know how we can make it work in other way? don’t put amide bonds there in the first place and just make a small molecule pharmaceutical like we can do (as in, organic chemists)

another part of the concern is that these things could transform organic carbon in form unusable to other organisms. but nature finds a way, and outside of fires etc, there are bacteria that feed on nylon and PET, so i think this situation won’t last long

Jfc, when I saw the headline I thought this would be a case of the city being too cheap to hire an actual artist and instead use autoplag, but no. And the guy they commissioned isn’t even some tech-brain LARP’ing as an artist, he has 20+ years of experience and a pretty huge portfolio, which somehow makes this worse on so many levels.

OK so we’re getting into deep rat lore now? I’m so sorry for what I’m about to do to you. I hope one day you can forgive me.

LessWrong diaspora factions! :blobcat_ohno:

https://transmom.love/@elilla/113639471445651398

if I got something wrong, please don’t tell me. gods I hope I got something wrong. “it’s spreading disinformation” I hope I am

My pedantic notes, modified by some of my experiences, so bla bla epistemic status, colored by my experiences and beliefs take with grain of salt etc. Please don’t take this as a correction, but just some of my notes and small minor things. As a general ‘trick more people into watching into the abyss’ guide it is a good post, mine is more an addition I guess.

SSC / The Motte: Scott Alexander’s devotees. once characterised by interest in mental health and a relatively benign, but medicalised, attitude to queer and especially trans people. The focus has since metastasised into pseudoscientific white supremacy and antifeminism.

This is a bit wrong tbh, SSC always was anti-feminist. Scott’s old (now deleted) livejournal writings, where he talks about larger discussion/conversation tactics in a broad meta way, the meditations on superweapons, always had the object level idea of attacking feminism. For example, using the wayback machine, the sixth meditation (this is the one I have bookmarked). He himself always seems to have had a bit of a love/hate relationship with his writings on anti-feminism and the fame and popularity this brought him.

The grey tribe bit is missing that guy who called himself grey tribe in I think it was silicon valley who wanted to team up with the red tribe to get rid of all the progressives, might be important to note because it looks like they are centrist, but shock horror, they team up with the right to do far right stuff.

I think the extropianists might even have different factions, like the one around Natasha Vita-More/Max More. But that is a bit more LW adjacent, and it more predates LW than it being a spinoff faction. (The extropian mailing list came first iirc.) Singularitarians and extropianists might be a bit closer together; Kurzweil wrote The Singularity Is Near after all, which is the book all these folks seem to get their AI doom ideas from. (If you ever see a line made up out of S-curves, that is from that book.) Kurzweil also is an exception to all these people as he actually has achievements: he built machines for the blind, image recognition things, etc etc, he isn’t just a writer. Nick Bostrom is also missing it seems, he is one of those X-risk guys. Also missing is Robin Hanson, who created the great filter idea, the prediction markets thing, and his Overcoming Bias is a huge influence on Rationalism, and could be considered a less focused on science fiction ideas part of Rationalism, but that was all a bit more 2013. (Check the 2013 map of the world of Dark Enlightenment on the Rationalwiki Neoreaction page.)

“the Protestants to the rationalists’ Catholicism” I lolled.

Note that a large part of sneerclubbers is (was) not ex rationalists, nor people who were initially interested in it. It actually started on reddit because badphil got so many rationalist suggestions that they created a spinoff. (At least so the story goes.) So it was started by people who actually had some philosophy training. (That also makes us the most academic faction!)

Another minor thing in long list of minor things, might also be useful to mention that Rationalwiki has nothing to do with these people and is more aligned with the sneerclub side.

There are also so many Scotts. Anyway, this post grew a bit out of my control, sorry for that, hope it doesn’t come off too badly, and do note that my additions make a short post way longer so prob are not that useful. Don’t think any of your post was misinformation btw. (I do think that several of these factions wouldn’t call themselves part of LW, and there is a bit of a question who influenced who: the Mores seem to be outside of all this for example, and a lot of extropians predate it etc etc. But that kind of nitpicking is for people who want to write books on these people.)

E: reading the thread, this is a good post and good to keep in mind btw. I would add not just what you mentioned but also mocking people for personal tragedy, as some people end/lose their lives due to rationalism, or have MH episodes, and we should be careful to treat those topics well. Which we mostly try to do I think.

wasnt that grey tribe guy just balaji srinivasan https://newrepublic.com/article/180487/balaji-srinivasan-network-state-plutocrat

it’s certainly the vector from which I’d first heard about that term, but hadn’t realized balaji shillrinivasan was original enough to have come up with that himself

He didnt, the term is from slatestarcodex, who he follows/ed

ah, figures. certainly does have more slatescott sauce on it

Yes forgot the name, that old Moldbug penpal.


This is great. The “diaspora” framing makes me want there to be an NPR style public interest story about all this. The emotional core would be about trying to find a place to belong and being betrayed by the people you thought could be your friends, or something.

Adam Christopher comments on a story in Publishers Weekly.

Says the CEO of HarperCollins on AI:

“One idea is a “talking book,” where a book sits atop a large language model, allowing readers to converse with an AI facsimile of its author.”

Please, just make it stop, somebody.

Robert Evans adds,

there’s a pretty good short story idea in some publisher offering an AI facsimile of Harlan Ellison that then tortures its readers to death

Kevin Kruse observes,

I guess this means that HarperCollins is getting out of the business of publishing actual books by actual people, because no one worth a damn is ever going to sign a contract to publish with an outfit with this much fucking contempt for its authors.

Casting Harlan Ellison as the Acausal Robot God is just the best.

just delivered a commission on why bitcoin is very like hawk tuah

this season’s word is: kleptokakistocracy

Can we all take a moment to appreciate this absolutely wild take from Google’s latest quantum press release (bolding mine) https://blog.google/technology/research/google-willow-quantum-chip/

Willow’s performance on this benchmark is astonishing: It performed a computation in under five minutes that would take one of today’s fastest supercomputers 10^25 or 10 septillion years. If you want to write it out, it’s 10,000,000,000,000,000,000,000,000 years. This mind-boggling number exceeds known timescales in physics and vastly exceeds the age of the universe. It lends credence to the notion that quantum computation occurs in many parallel universes, in line with the idea that we live in a multiverse, a prediction first made by David Deutsch.

The more I think about it the stupider it gets. I’d love if someone with an actual physics background were to comment on it. But my layman take is it reads as nonsense to the point of being irresponsible scientific misinformation whether or not you believe in the many worlds interpretation.

Does it also destroy all the universes where the question was answered wrong?

One of these days we’ll get the quantum bogosort working.

“Quantum computation happens in parallel worlds simultaneously” is a lazy take trotted out by people who want to believe in parallel worlds. It is a bad mental image, because it gives the misleading impression that a quantum computer could speed up anything. But all the indications from the actual math are that quantum computers would be better at some tasks than at others. (If you want to use the names that CS people have invented for complexity classes, this imagery would lead you to think that quantum computers could whack any problem in EXPSPACE. But the actual complexity class for “problems efficiently solvable on a quantum computer”, BQP, is known to be contained in PSPACE, which is strictly smaller than EXPSPACE.) It also completely obscures the very important point that some tasks look like they’d need a quantum computer — the program is written in quantum circuit language and all that — but a classical computer can actually do the job efficiently. Accepting the goofy pop-science/science-fiction imagery as truth would mean you’d never imagine the Gottesman–Knill theorem could be true.
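For reference, the containments behind that parenthetical can be written as one chain. This is standard textbook material rather than anything specific to the Willow result; the strictness of the last step follows from the space hierarchy theorem, while whether the earlier inclusions are strict is open.

```latex
\[
\mathsf{P} \subseteq \mathsf{BPP} \subseteq \mathsf{BQP} \subseteq \mathsf{PSPACE} \subsetneq \mathsf{EXPSPACE}
\]
```

So whatever a quantum computer can do efficiently, a classical machine can do with polynomial memory (though possibly a great deal of time), and that sits well short of "whack anything in EXPSPACE".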

To quote a paper by Andy Steane, one of the early contributors to quantum error correction:

The answer to the question ‘where does a quantum computer manage to perform its amazing computations?’ is, we conclude, ‘in the region of spacetime occupied by the quantum computer’.

Tangentially, I know about nothing of quantum mechanics but lately I’ve been very annoyed alone in my head at (the popular perception of?) many-world theory in general. From what I’m understanding about it, there are two possibilities: either it’s pure metaphysics, in which case who cares? or it’s a truism, i.e. if we model things that way that makes it so we can talk about reality in this way. This… might be true of all quantum interpretations, but many-world annoys me more because it’s such a literal vision trying to be cool.

I don’t know, tell me if I’m off the mark!

There’s a whole lot of assuming-the-conclusion in advocacy for many-worlds interpretations, sometimes from philosophers and all the time from Yuddites online. If you make a whole bunch of tacit assumptions, starting with those about how mathematics relates to physical reality, you end up in MWI country. And if you make sure your assumptions stay tacit, you can act like an MWI is the only answer, and everyone else is being ~~un-mutual~~ irrational.

(I use the plural interpretations here because there’s not just one flavor of MWIce cream. The people who take it seriously have been arguing amongst one another about how to make it work for half a century now. What does it mean for one event to be more probable than another if all events always happen? When is one “world” distinct from another? The arguments iterate like the construction of a fractal curve.)

Humans can’t help but return to questions the presocratics already struggled with. Makes me happy.

Unfortunately “states of quantum systems form a vector space, and states are often usefully described as linear combinations of other states” doesn’t make for good science fiction compared to “whoa dude, like, the multiverse, man.”

“lends credence”? yeah, that smells like BS.

some marketing person probably saw that the time estimate of the conventional computation exceeded the age of the universe multiple times over, and decided that must mean multiple universes were somehow involved, because big number bigger than smaller number

It reads to me like either they got lucky or encountered a measurement error somewhere, but the peer review notes from Nature don’t show any call outs of obvious BS, though I don’t have any real academic science experience, much less in the specific field of quantum computing.

Then again, this may not be too far beyond the predicted boundaries of what quantum computers are capable of and while the assumption that computation is happening in alternate dimensions seems like it would require quantum physicists to agree on a lot more about interpretation than they currently do the actual performance is probably triggering some false positives in my BS detector.

The peer reviewers didn’t say anything about it because they never saw it: It’s an unilluminating comparison thrown into the press release but not included in the actual paper.

Maybe I’m being overzealous (I can do that sometimes).

But I don’t understand why this particular experiment suggests the multiverse. The logic appears to be something like:

- This algorithm would take a gazillion years on a classical computer

- So maybe other worlds are helping with the compute cost!

But I don’t understand this argument at all. The universe is quantum, not classical. So why do other worlds need to help with the compute? Why does this experiment suggest it in particular? Why does it make sense for computational costs to be amortized across different worlds if those worlds will then have to go on to do other different quantum calculations than ours? It feels like there’s no “savings” anyway. Would a smaller quantum problem feasible to solve classically not imply a multiverse? If so, what exactly is the threshold?

I mean, unrestricted skepticism is the appropriate response to any press release, especially coming out of silicon valley megacorps these days. But I agree that the kind of performance they’re talking about doesn’t seem like it would somehow require extra-dimensional communication and computation, whatever that would even mean.

I mean, unrestricted skepticism is the appropriate response to any press release, especially coming out of silicon valley megacorps these days.

Indeed, I’ve been involved in crafting a silicon valley megacorp press release before. I’ve seen how the sausage is made! (Mine was more or less factual or I wouldn’t have put my name on it, but dear heavens a lot of wordsmithing goes into any official communication at megacorps)

these are some silly numbers. if all this is irreversible computation and if landauer principle holds and there’s no excessive trickery or creative accounting involved, then they’d need to dissipate something in range of 4.7E23 J at 1mK, or 112 Tt of TNT equivalent (112 million Mt)
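A rough check of the arithmetic, as a sketch only: the comment does not say how many operations it assumed, so this takes the quoted 4.7E23 J and 1 mK at face value and only back-derives the implied number of bit erasures and the TNT conversion. The variable names are made up for illustration.

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23  # exact SI value
TNT_J_PER_TONNE = 4.184e9         # conventional energy of one tonne of TNT

def landauer_joules_per_bit(temperature_k: float) -> float:
    """Minimum heat dissipated per irreversibly erased bit: k * T * ln 2."""
    return BOLTZMANN_J_PER_K * temperature_k * math.log(2)

energy_j = 4.7e23      # figure quoted in the comment
temperature_k = 1e-3   # 1 mK, as quoted

# How many bit erasures the quoted energy corresponds to at the Landauer limit
# (back-derived, since the original operation count is not stated).
implied_bit_erasures = energy_j / landauer_joules_per_bit(temperature_k)

# Convert joules to teratonnes of TNT (1 Tt = 1e12 tonnes).
tnt_teratonnes = energy_j / TNT_J_PER_TONNE / 1e12

print(f"{implied_bit_erasures:.2e} bit erasures")
print(f"{tnt_teratonnes:.0f} Tt of TNT")
```

The TNT figure comes out at about 112 Tt, matching the comment, and the energy corresponds to roughly 4.9e49 erased bits at 1 mK.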

(disclaimer - not a physicist)

The computation seems to be generating a uniformly random set and picking a sample of it. I can buy that it’d be insanely expensive to do this on a classical computer, since there’s no reasonable way to generate a truly random set. Feels kinda like an unfair benchmark as this wouldn’t be something you’d actually point a classical computer at, but then again, that’s how benchmarks work.

I’m not big in quantum, so I can’t say if that’s something a quantum computer can do, but I can accept the math, if not the marketing.

How do you figure? It’s absolutely possible in principle that a quantum computer can efficiently perform computations which would be extremely expensive to perform on a classical computer.

your regular reminder that the guy with de facto ownership over the entire Rust ecosystem outside of the standard library and core is very proud about being in Peter Thiel’s pocket (and that post is in reference to this article)

e: on second thought I’m being unfair — he owns the conferences and the compiler spec process too

I didn’t think today was gonna be a day where I’d read about sounding then breaking glass rods but here we are.

it’s an unhinged story he keeps telling on the orange site too, and I don’t think he’s ever answered some of the obvious questions:

- why is this a story your family tells their kids in apparent graphic detail?

- you’re still fighting the soviets? you don’t have any more up to date bad guys to point at when people ask you why you’re making murder drones and knife missiles?

- are you completely sure this happened instead of something normal, like your communist great grandfather making up a story and sticking with it cause he was terrified of the House Unamerican Activities Committee? maybe this one is just me

maybe this is a cautionary tale about telling your kids cautionary tales

maybe it’s a mutation of a story on how breakup of yugoslavia started (nsfw)

So it turns out the healthcare assassin has some… boutique… views. (Yeah, I know, shocker.) Things he seems to be into:

- Lab-grown meat

- Modern architecture is rotten

- Population decline is an existential threat

- Elon Musk and Peter Thiel

How soon until someone finds his LessWrong profile?

the absolute state of american politics: rentseeker ceo gets popped by a libertarian

Someone else said it, but for someone completely accustomed to a life of easy privilege, having it suddenly disappear can be utterly intolerable.

We should expect more of this to come. The ascendant right wing is pushing policies that only deliver for people who are already stinking rich. Even if 99% of those who vote that way go along with the propaganda line in the face of their own disappointment, that’s still a lot of unhappy people, who are not known for intellectual consistency or calm self-reflection, in a country overflowing with guns. All it takes is one ammosexual who decides that his local Congressman has been co-opted by the (((globalists))), you know?

there’s gotta be many more than 1% of right to far-right wingers clocking that that ambient suckiness is result of republican policies or profit squeezing, it’s just that i expected them to be way more apathetic

qanon and weird nazis were around for some time and they definitely can get worse, but i don’t think it’s it

also, killing CEOs is more of traditional activity of more ideologically consistent far left groups, like RAF. can’t have shit in late capitalism

RAF’s aims were explicitly accelerationist: their terror would provoke a ferocious repressive response that would open the eyes of the masses to the repressive government and trigger a revolution.

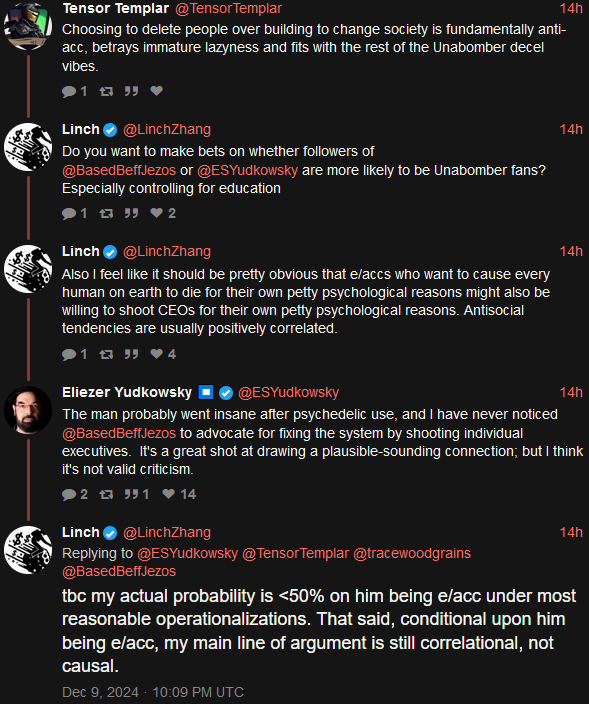

StRev was calling him TPOT adjacent and woodgrains was having a bit of a panic over it.

“Our righteous warriors are only supposed to kill brown people and women, not captains of industry!!”

dust specks vs CEOs

Thats a very real viewpoint being pushed by the Thiel adjacent far righters.

tfw the Holy Book (Atlas Shrugged) was misinterpreted.

According to his goodreads he had not read it yet.

It’s so embarrassing to watch upper-middle class (at best!) rationalists get their panties in a twist over Luigi. At least the right wing talking heads are getting paid, these guys are just mad he did things instead of tweeting about things.

“My heavens, our self-regarding supremacist ideology can’t possibly imply violence… can it???”

Do you have a link? I’m interested. (Also, I see you posted something similar a couple hours before I did. Sorry I missed that!)

https://x.com/tracewoodgrains/status/1866197443404247185

https://x.com/st_rev/status/1866191524498719147

Sorry for not using nitter but im on phone.

From the replies:

I see the interest in AI, Peter Thiel, and the far future, but it’s a lot more rationality adjacent than EA.

“It’s a lot more country than western”

meanwhile his twitter:

The starving people are tied to the track, the trolley barreling down at them, they are screaming at the rich to release them or pull the lever, which they can’t, not without the rich guys help. But the rich are not helping. Why are the rich not helping Elon?

TWG: is a good reminder that atrocities don’t come from convenient avatars of all I oppose

Random bluecheck: On the contrary, this just reaffirms my biases that e/accs and AGI race-promoters are the source of every bad thing in the world.

TWG: was he e/acc?

We are in the ‘he only followed some e/acc, he never said he was e/acc’ phase. Wonder what his manifesto will say.

this whole exchange is so beautiful i love these stupid fucking idiots WHY ARE THEY EVERYWHERE

That conversation reads like from a variation of Mafia/Werewolf where you have to figure out who in your circle of rationalists is secretly e/acc and wants to build the torment nexus.

bwahahaha “most reasonable operationalizations; conditional upon being e/acc.” Why does this person make me think of nasal, vaguely whiny prequel Spock?

“Tensor Templar” lmao. Hey buddy, what did the Knights Templar do, besides banking?

The man who hypes up that higher intelligences can figure out laws and rules from data quicker and more thoroughly than lower intelligences defending e/acc accounts by going ‘well they never openly advocated for killing, so this is not a valid concern’. Did he forget he advocated for bombing datacenters? Guess he never read any science fiction where ‘“rational” leader accidentally inspires murderous cult’. By The God Emperor, somebody should write a science fiction novel about that. (E: hell, does he even get what accelerationism implies? Somebody reading between the lines and going 🎵 'So let the games begin, A heinous crime, a show of force, A murder would be nice of course’🎵 is not out of the question.)

Hopefully it elaborates on whatever the fuck this is:

Modern Japanese urban environment is an evolutionary mismatch for the human animal.

The solution to falling birthdates isn’t immigration. It’s cultural.

Encourage natural human interaction, sex, physical fitness and spirituality:

- ban Tenga fleshlights and “Japan Real Hole” custom pornstar pocket pussies being sold in Don Quixote grocery stores

- replace conveyor belt sushi and restaurant vending machine ordering, with actual human interaction with a waiter

- replace 24/7 eSports cafes where young males earn false fitness signals via Tekken fighting and Overwatch shooting games, with athletics in school

- heavily stigmatize maid cafes where lonely salarymen pay young girls to dress as anime characters and perform anime dances for them

- revitalize traditional Japanese culture (Shintoism, Okinawan karate, onsen, etc)

If we couldn’t react with “wake up babe, new copypasta just dropped” or “tag yourself, I’m the false fitness signal in the maid café”, we couldn’t react to a lot of life.

being performatively worried about Japanese birth rates is a HN trope, for whatever that’s worth

i thought for some time now that problems with japanese society are things like stifling conformism, ridiculous degree of nationalism and sexism, how they are functionally a one-party state or stiff strictly hierarchical relationships that appear out of nowhere the entire time. but yeah definitely this seed oils level of conspiracy thinking is accepted truth on eacc twitter

it also sounds extremely specific

I dont know enough about Japan, but is it just me or is that a very specific fleshlight gripe? E: yes, I guess, this means I’m tagging myself as the fleshlight or something.

This reminded me that TWG has a Twitter account. I could have done without that reminder.

A few years ago, I would have pointed to Elon Musk as someone approximately where I was in the political spectrum. The left pushed him away, the right welcomed him, and he spent hundreds of millions of dollars and put in immense effort to elect Trump.

No, you embossed carbuncle. Apartheid boy was evil all along; you were just too media-illiterate to see through the propaganda.

The left made him call that guy a pedo in 2018, and tell his first wife he was the alpha in the relationship in 2000. Thanks Obama.

E: forgot about the ‘im actually a socialist, but with a more capitalist character’ tweet

The left did not push Elon Musk away. The left has fundamentally different interests and values to the billionaire and alleged rapist.

this guy nailing the UHC CEO is the only good thing they will ever have a chance of claiming credit for, so of course they rush to disavow him

lol that’s exquisite

but you could tell the guy was into Effective Altruism from how he did dust specks vs CEOs

@TinyTimmyTokyo @BlueMonday1984 lab-grown meat is fine tho. I’d be all over it if it ever works.

But yeah he’d fit right in on LW I’m sure

Edited based on later chat: eh

https://med-mastodon.com/@noodlemaz/113641637798676074

one of the best articles I’ve ever read was an inch by inch teardown of the entire concept of mass produced lab grown meat: https://thecounter.org/lab-grown-cultivated-meat-cost-at-scale/ . it’s never, ever going to work at scale and I’d go so far as to say it’s the food equivalent of all the usual tech grifts we talk about here

if luigi incident kicks off next miracle tech sv bubble after ai this would be the single dumbest outcome out of this entire situation

so far tpot got exposure and fake manifesto included altmed dogwhistle right in the title

@sc_griffith thanks, that is a cool piece! Indeed having done plenty cell culture myself and seeing those shockingly astronomical meat consumption figures, the solutions seem much clearer to me. I doubt LGM will be a useful reality in my lifetime, maybe far in the future. So, people need to stop eating meat, and eat plants instead.

Many are already doing so! I’ve mostly stopped with meat myself, just some fish to go.

But we need political will. Change of culture. Sanctions on the US, etc :/

@sc_griffith stood out:

“a mature, scaled-up industry could eventually achieve a ratio of only 3-4 calories in for every calorie out, compared to the chicken’s 10 and the steer’s 25. That would still make cultured meat much more inefficient compared to just eating plants themselves… And the cells themselves might still be fed on a diet of commodity grains, the cheapest and most environmentally destructive inputs available. But it would represent a major improvement.”

@sc_griffith last one (probably) - as I’m now working quite a lot adjacent to philanthropists (and find the whole concept and reality of that deeply morally unpleasant on many levels), v interesting interweaving of that aspect.

And again, comes back to the EA nonsense.

Sigh

Same. I’m not being critical of lab-grown meat. I think it’s a great idea.

But the pattern of things he’s got an opinion on suggests a familiarity with rationalist/EA/accelerationist/TPOT ideas.

reddit just launched an LLM integrated into the site. hardly any point going through what garbage these things are at this point but of course it failed the first test I gave it