So we’re starting to get to the point where it’s theoretically possible for computers to get real organic viruses? “Sorry boss, I can’t work today, my computer caught Covid and coughed on me so now I have it too :(”

Caught prions*

And that’s how BioSkynet starts.

And ends.

Don’t scare me like that

There was a documentary about this a while ago which was pretty terrifying. They basically go into how you can essentially “grow” computers to augment reality and human perception. Pretty crazy. “eXistenZ” was the name I think. I believe Jude Law was the narrator or something, I don’t remember.

I’m positive that David Cronenberg had no idea what a video game was when he made that movie

Weird. I just very recently tried hard to remember the name of this movie, for a completely different topic (teeth as ammo).

Great movie. DEATH TO EXISTENZ!

Very good movie

Way back in the LimeWire era this was credited to NIN https://www.youtube.com/watch?v=hW_4mRJCatg – to this day I haven’t gotten to the bottom of it. I should watch the movie

Here is an alternative Piped link(s):

https://www.piped.video/watch?v=hW_4mRJCatg

Piped is a privacy-respecting open-source alternative frontend to YouTube.

I’m open-source; check me out at GitHub.

I’ve got just the thing

Get the cheese to sickbay!

Are you seriously ill, but don’t want to leave a ton of medical debts to your family?

Then donate your brain tissue to BrainCloud™! Instead of costing your family a lot of money, you might make them Millionaires* and also reduce CO2 emissions of world leading AI applications! Leaving a better world for our children!

And who knows, maybe you will even enjoy thinking about chat bot responses in weird nightmarish ways for the rest of what might seem like an eternity.

~*We offer a donation compensation of up to $1.000.000. Actual rates depend on brain capabilities, size and constitution. Payouts are determined by our quality assurance team. Payouts are not guaranteed. In cases of brain tissue with insufficient quality, compensational fees for testing, lab work, and services may be charged to the donor’s family.~

Oh God, imagine your braincells being used to mine crypto.

You ‘pute 64 bits, whaddya get?

Just ‘nother load for your instruction set

Satoshi don’t call me cuz I can’t go

I sold my soul to the crypto bros

Oh no, he’s overheating!

Oh my god. That would be… no different than now for me 😒

I can definitely imagine your braincells being used to mine my crypto!

Are homeless people going to start mysteriously disappearing now

Don’t need the homeless. You can pluck a hair, donate your blood, or even take a plug of your foreskin if you have one, to generate the neural stem cells from iPSC, the cell type they use in this process.

if you have one.

😔

a plug of your foreskin

Can you use them to grow more dicks on your body like hair transplants?

damn, blood it is then

Nah. No way. I don’t want my computer asking me for vodka…

IIRC these organoids also die after somewhere around 100 days of hypoxia, because they have yet to be able to construct a proper circulatory system for them.

Oh, a CPU that straight up expires? A product that comes with enshittification built in from the start? Corporations’ mouths are watering as we speak.

In about a month lemmy will discover that human beings die and will complain about the enshittification of life.

Enshittification - a pattern of decreasing quality.

Why life expectancy in the US is falling.

Declining Health-Related Quality of Life in the U.S.

The enshittification of life is real.

There are like 200 countries in the world that are not the US.

That can’t be right.

Pff, the enshittification of life would be if it just kept on going. Thankfully the misery will end at some point.

Is there a transhumanist sub?

This is still experimental. There’s not even the slightest glimmer of a product in this yet.

That didn’t stop everyone from jumping on GPT, either.

Not sure how using a free service is the same as using human neurons as a processor.

Pfft, like that ever stopped them.

“Early release here we come!”

It’s not a product yet in part because it dies in 100 days.

That would be a much older thing, planned obsolescence

also they only feel pain and suffering for every second of their miserable existence. They welcome the cold embrace of the void.

this reminds me of a story about someone who couldn’t talk but they had to scream, i think it was called, “the guy who stubbed his toe in the library”

Darkness…imprisoning me…all that I see…absolute horror

I cannot live…I cannot die…trapped in myself…body, my holding ceeeEEEEELLLLL

So basically a first trimester abortion. Will these be available in Texas?

Is this legit? This is the first time I’ve heard of human neurons used for such a purpose. Kind of surprised that’s legal. Instinctively, I feel like a “human brain organoid” is close enough to a human that you cannot wave away the potential for consciousness so easily. At what point does something like this deserve human rights?

I notice that the paper is published in Frontiers, the same journal that let the notorious AI-generated giant-rat-testicles image get published. They are not highly regarded in general.

They don’t really go into the size of the organoid, but it’s extremely doubtful that it’s large and complex enough to get anywhere close to consciousness.

There’s also no guarantee that a lump of brain tissue could ever achieve consciousness, especially if the architecture is drastically different from an actual brain.

Well, we haven’t solved the hard problem of consciousness, so we don’t know if size of brain or similarity to human brain are factors for developing consciousness. But perhaps a more important question is, if it did develop consciousness, how much pain would it experience?

Physical pain? Zero.

Now emotional pain? I’m not sure it would even be able to accomplish emotional pain. So much of our emotions are intertwined with chemical balances and releases. If a brain achieved consciousness, but had none of these chemicals at all…I don’t know that’d even work.

While we haven’t confirmed this experimentally (ominous voice: yet), computationally there’s no reason even a simple synthetic brain couldn’t experience emotions. Chemical neurotransmitters are just an added layer of structural complexity, so Church–Turing will still hold true. Human brains are only powerful because they have an absurdly high parallel network throughput rate (computational bus might be a better term); the actual neuron part is dead simple. Network computation is fascinating, but much like linear algebra the actual mechanisms are so simple they’re dead boring - but if you cram 200,000,000 of those mechanisms into a salty water balloon it can produce some really pompous lemmy comments.

Emotions are holographic anyways so the question is kinda meaningless. It’s like asking if an artificial brain will perceive the color green as the same color we ‘see’ as green. It sounds deep until you realize it’s all fake, man. It’s all fake. Wake up, Neo…

I’m not sure what color skittles I ate, but I’m feeling…horny.

Physical pain? Zero.

Did you think about this before you wrote it?

Didn’t have to. Kind of an obvious thing to point out, but OP didn’t specify what type of pain he meant, so I figured I would, just in case.

How is it obvious?

Human brains don’t actually have any pain receptors (even though headaches would have you seriously believe otherwise), so a brain alone wouldn’t be able to feel pain any more than it would be able to smell or see.

Physical pain only exists from nerves. Brains don’t have any nerves. No nerves. No pain.

Believe it or not, I studied this in school. There’s some niche applications for alternative computers like this. My favorite is the way you can use DNA to solve the traveling salesman problem (https://en.wikipedia.org/wiki/DNA_computing?wprov=sfla1)
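For anyone curious what the DNA trick looks like in software terms, here is a rough sketch. As far as I know, the classic demo (Adleman, 1994) tackled the directed Hamiltonian path problem, a close cousin of traveling salesman, by making a huge soup of candidate paths and then filtering out the bad ones. The function names and the tiny example graph below are made up for illustration, and an exhaustive list of walks stands in for the random strand assembly that happens in the test tube.

```python
def walks(edges, length):
    """All walks that follow `length` directed edges (stand-in for the strand soup)."""
    paths = [(a, b) for a, b in edges]
    for _ in range(length - 1):
        # Extend every walk by each edge that starts where the walk currently ends.
        paths = [p + (b,) for p in paths for a, b in edges if a == p[-1]]
    return paths


def hamiltonian_paths(edges, n, start, end):
    """Filter the soup down to paths that visit all n vertices exactly once."""
    tube = walks(edges, n - 1)  # keep only strands of the right length
    tube = [p for p in tube if p[0] == start and p[-1] == end]  # right endpoints
    return [p for p in tube if len(set(p)) == n]  # every vertex appears once


# Hypothetical 4-vertex directed graph, vertices numbered 0..3.
EXAMPLE_EDGES = [(0, 1), (1, 2), (2, 3), (0, 2), (1, 3), (2, 1)]
result = sorted(hamiltonian_paths(EXAMPLE_EDGES, 4, start=0, end=3))
print(result)
```

The point of the wetware version is that the "generate everything" step happens massively in parallel in the chemistry, while the code above pays for it with a list that grows exponentially with the graph size.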

There have been other “bioprocessors” before this one, some of which have used neurons for simple image detection, e.g. https://ieeexplore.ieee.org/abstract/document/1396377?casa_token=-gOCNaYaKZIAAAAA:Z0pSQkyDBjv6ITghDSt5YnbvrkA88fAfQV_ISknUF_5XURVI5N995YNaTVLUtacS7cTsOs7o. But this seems to be the first commercial application. Yes, it’ll use less energy, but the applications will probably be equally niche. Artificial neural networks can do most of the important parts (like “learn” and “remember”) and are less finicky to work with.

Careful. Get too deep into that and people will have to admit lesser animals have forms of consciousness.

Seems like it’s an ethical gray area. Some brain organoids have responded to light stimulus and there are concerns they might be able to feel pain or develop consciousness. (Full disclosure, I had no idea what an organoid even was before reading this and then did some quick follow up reading)

How complex does a neural net have to be before you can call any of its outputs ‘pain’?

Start with a lightswitch with ‘pain’ written on a post-it note stuck to the on position, end with a toddler. Where’s the line?

I think that’s why it’s a gray area. No one knows

I’m concerned about what kind of hardware is sold on tomshardware

Tom’s, of course

If you wanted Pete’s, shoulda went to peteshardware 🤷

Turns out the origin of borgs was actually earth!

“LOBOTOMITE!”

I have very good raisins for everything that I do

Organoids is such a fun word to say.

The Return of The Organoids

I also like to play around with it.

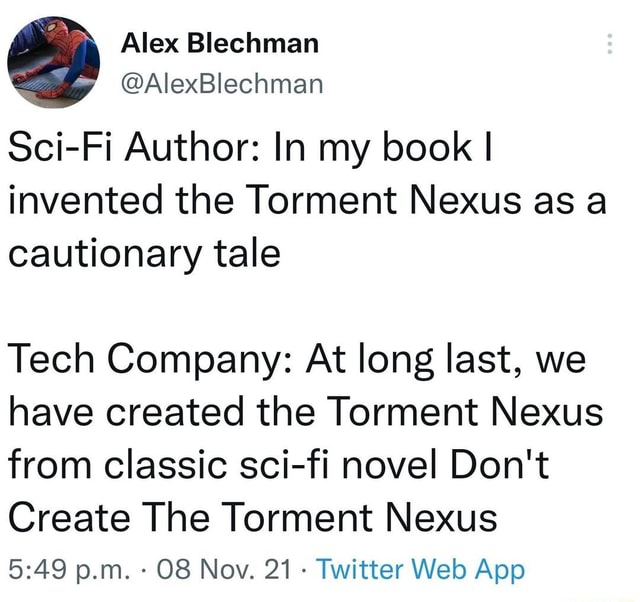

People seeing scifi works as somehow prophetic is something I will never understand…

Prophetic? Fuck no. Cautionary tales that unfortunately turn into self-fulfilling prophecies? That’s a different story

Scifi is kind of reverse prophetic, a lot of people become scientists because they were into scifi and at the end of the day we need to imagine something is possible before we can invent it.

Article claims they are human brain organoids, doesn’t say where the source of them is. Are these grown, like most other neural computing systems, or are they actually taking matter from a human brain?

Organoids are largely homogenous lab-grown mini-organs.

So is it fair to call them human or is that just sensationalism in the article?

They are neurons derived and grown from human skin cells iirc, so, kinda?

It’s because they’re human cells, as opposed to being rat cells or something

Not only is what I’m hearing.

I think the “largely” only refers to the homogeneous part. I hope it does

Here’s a video that starts with a good general overview of brain organoids:

Here is an alternative Piped link(s):

https://www.piped.video/watch?v=x1Pg56WWm5U

pffft good luck getting my brain organoids to do your bidding… I am deeply tarded

It could be penis cells for all we know.

You misspelled ‘should.’

Nanotechnology, finally my penis will be heralded as a hero!

They could be guiding bombs with this technology and it would be immune to Electromagnetic attacks.

Begun, the Bone Wars have.

Stop giving them ideas.

it wouldn’t* because the connections to the parts would still have wires which would fry the lil guys

You know I was commenting sarcastically.

Why am I remembering that video with Ukrainian (I think) soldiers arming a custom-made bomb (for a copter) with shrapnel in the form of little dicks?

Worse at approximation but more deterministic.

And CEOs should love it since they only go up.

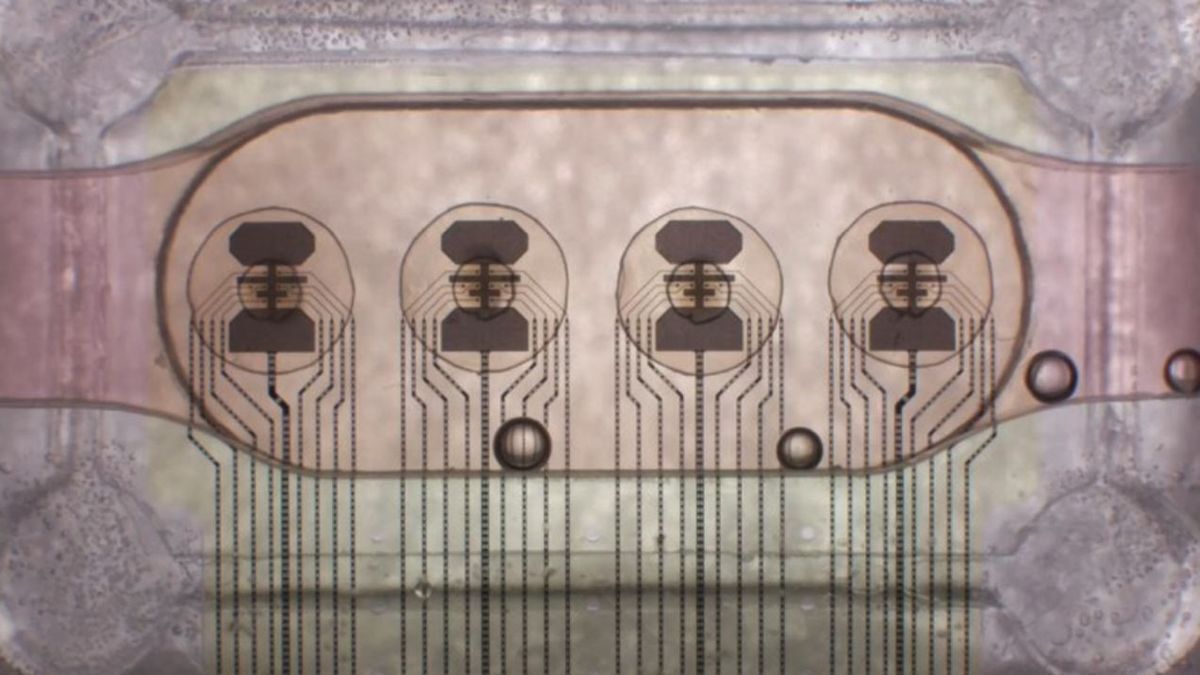

Some cells get taken from you and turned into stem cells.

These are converted into brain cells, and nerve cells, on a chip that represents the scaffolding, interface, and connectivity.

Then the whole ‘organ-device’ gets surgically installed into your brain, and through gene therapy, the brain cells grow into, connect with and network into your existing tissue.

They have to use STEM cells because other kinds of cells are bad at math.

Boo this man!

And then every time you sneeze, you end up ordering another case of diapers from Amazon.

We’re getting closer to the Imperium of Man every day.

At least we get the Golden Age of Technology first.

Just ten thousand years to go!

If this works, it’s noteworthy. I don’t know if similar results have been achieved before because I don’t follow developments that closely, but I expect that biological computing is going to catch a lot more attention in the near-to-mid-term future. Because of its efficiency and the increasingly tight constraints imposed on humans by environmental pressure, I foresee it eventually eclipsing silicon-based computing.

FinalSpark says its Neuroplatform is capable of learning and processing information

They sneak that in there as if it’s just a cool little fact, but this should be the real headline. I can’t believe they just left it at that. Deep learning cannot be the future of AI, because it doesn’t facilitate continuous learning. Active inference is a term that will probably be thrown about a lot more in the coming months and years, and as evidenced by all kinds of living things around us, wetware architectures are highly suitable for the purpose of instantiating agents doing active inference.

tbh this research has been ongoing for a while. this guy has been working on this problem for years in his homelab. it’s also known that this could be a step toward better efficiency.

this definitely doesn’t spell the end of digital electronics. at the end of the day, we’re still going to want light switches, and it’s not practical to have a butter spreading robot that can experience an existential crisis. neural networks, both organic and artificial, perform more or less the same function: given some input, predict an output and attempt to learn from that outcome. the neat part is when you pile on a trillion of them, you get a being that can adapt to scenarios it’s not familiar with efficiently.
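to make the "given some input, predict an output and attempt to learn from that outcome" loop concrete, here is a minimal sketch of a single artificial neuron (a classic perceptron) learning the AND function. this is a toy illustration only, not how the organoid platform or any modern ANN is actually trained; the function names, the learning rate, and the AND example are all assumptions picked for the demo.

```python
def predict(weights, bias, x):
    """Fire (1) if the weighted sum of the inputs plus bias is positive, else 0."""
    total = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if total > 0 else 0


def train(samples, epochs=20, lr=1.0):
    """Perceptron rule: predict, compare with the real outcome, nudge the weights."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(weights, bias, x)  # -1, 0, or +1
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


# Hypothetical training data: the logical AND of two binary inputs.
AND_GATE = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train(AND_GATE)
outputs = [predict(weights, bias, x) for x, _ in AND_GATE]
print(outputs)
```

one of these is boring on its own, which is kind of the point: the interesting behavior only shows up once you wire a huge number of them together.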

you’ll notice they’re not advertising any experimental results with regard to prediction benchmarks. that’s because 1) this actually isn’t large scale enough to compete with state of the art ANNs, 2) the relatively low resolution (16 bit) means inputs and outputs will be simple, and 3) this is more of a SaaS product than an introduction to organic computing as a concept.

it looks like a neat API if you want to start messing with these concepts without having to build a lab.

It’s been in development for a while: https://ieeexplore.ieee.org/abstract/document/1396377?casa_token=-gOCNaYaKZIAAAAA:Z0pSQkyDBjv6ITghDSt5YnbvrkA88fAfQV_ISknUF_5XURVI5N995YNaTVLUtacS7cTsOs7o

Even before the above paper, I recall efforts to connect (rat) brains to computers in the late 90s/early 2000s. https://link.springer.com/article/10.1023/A:1012407611130